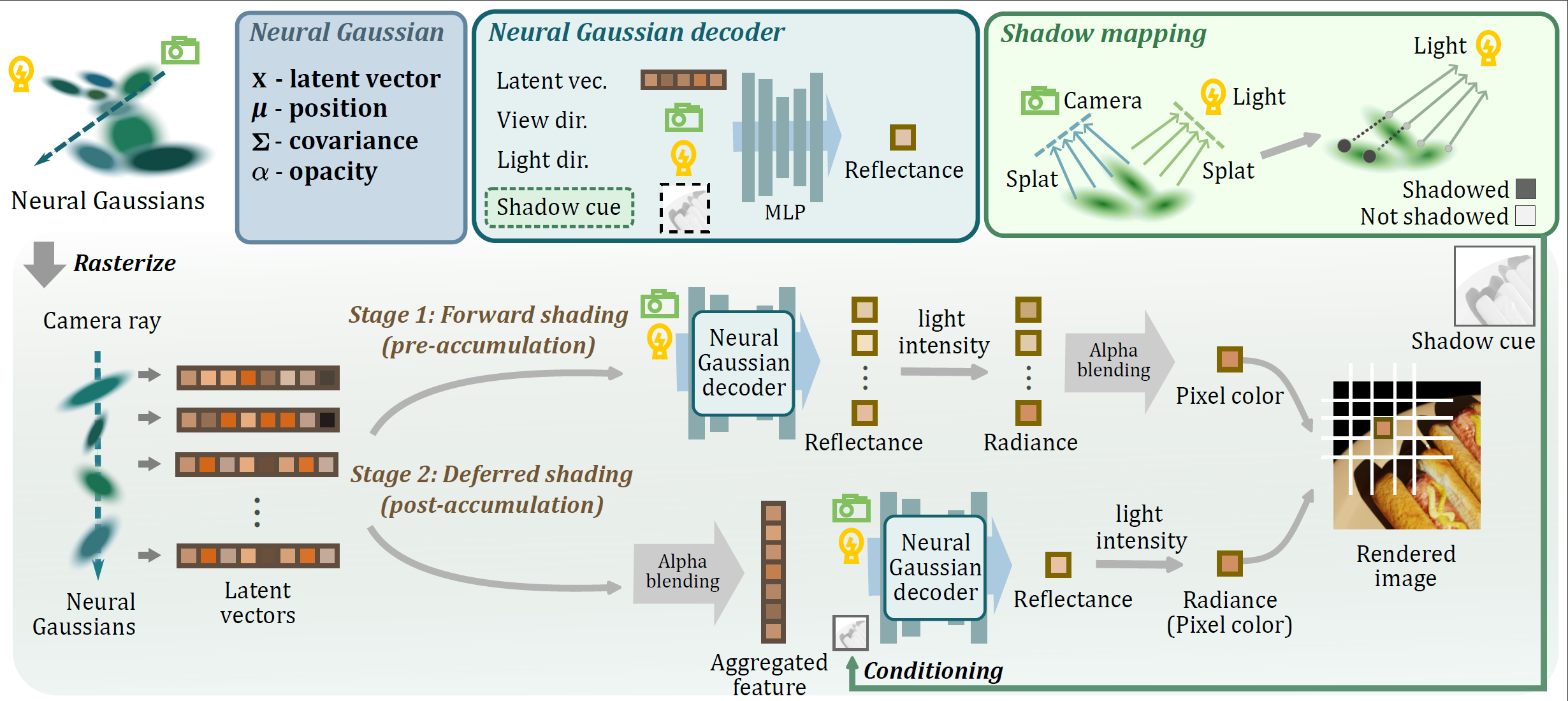

Hybrid Forward-Deferred Pipeline

Overview of RNG. Each Gaussian point in the scene carries an extra latent vector that describes its reflectance. The latent vectors are interpreted by an MLP decoder conditioned on the view and light directions. Training has two stages. In the first stage, we use forward shading: we decode the latent vector of every Gaussian point into a color and then alpha-blend the colors. In the second stage, we use deferred shading: we first alpha-blend the neural Gaussian features into an aggregated per-pixel feature and then feed that feature to the decoder. We apply shadow mapping to obtain a shadow cue map and feed the shadow cue to the decoder as an extra input in the second stage.
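The difference between the two stages is only the order of "decode" and "blend". The NumPy sketch below illustrates this for the Gaussians covering a single pixel. The latent size, the two-layer decoder, its random weights, and the choice to feed a zero shadow cue during the forward stage are all hypothetical; it is not the RNG implementation.

```python
import numpy as np

rng = np.random.default_rng(0)

LATENT_DIM = 8  # hypothetical latent size
HIDDEN = 16     # hypothetical hidden width
# Decoder input: latent + view dir (3) + light dir (3) + shadow cue (1)
IN_DIM = LATENT_DIM + 3 + 3 + 1
W1 = rng.normal(scale=0.3, size=(IN_DIM, HIDDEN))
b1 = np.zeros(HIDDEN)
W2 = rng.normal(scale=0.3, size=(HIDDEN, 3))
b2 = np.zeros(3)


def decode(latent, view_dir, light_dir, shadow_cue):
    """Hypothetical MLP decoder; leading dims of `latent` are batch dims."""
    batch = latent.shape[:-1]
    v = np.broadcast_to(view_dir, batch + (3,))
    l = np.broadcast_to(light_dir, batch + (3,))
    s = np.broadcast_to(shadow_cue, batch + (1,))
    x = np.concatenate([latent, v, l, s], axis=-1)
    h = np.maximum(x @ W1 + b1, 0.0)                 # ReLU
    return 1.0 / (1.0 + np.exp(-(h @ W2 + b2)))      # sigmoid -> RGB in (0, 1)


def blend_weights(alphas):
    """Front-to-back compositing weights w_i = alpha_i * prod_{j<i} (1 - alpha_j)."""
    transmittance = np.cumprod(np.concatenate([[1.0], 1.0 - alphas[:-1]]))
    return alphas * transmittance


def forward_shading(latents, alphas, view_dir, light_dir):
    # Stage 1: decode every Gaussian's latent into a color, then blend the colors.
    # Assumption: no shadow cue yet, so a zero cue is fed to the shared decoder.
    colors = decode(latents, view_dir, light_dir, np.zeros(1))
    return blend_weights(alphas) @ colors


def deferred_shading(latents, alphas, view_dir, light_dir, shadow_cue):
    # Stage 2: blend the latent features first, then decode once per pixel,
    # with the shadow cue as an extra decoder input.
    feature = blend_weights(alphas) @ latents
    return decode(feature, view_dir, light_dir, shadow_cue)
```

In this sketch, forward shading calls the decoder once per Gaussian, while deferred shading calls it once per pixel. Because the decoder is nonlinear, the two generally give different colors. They coincide only in degenerate cases, such as a single fully opaque Gaussian.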

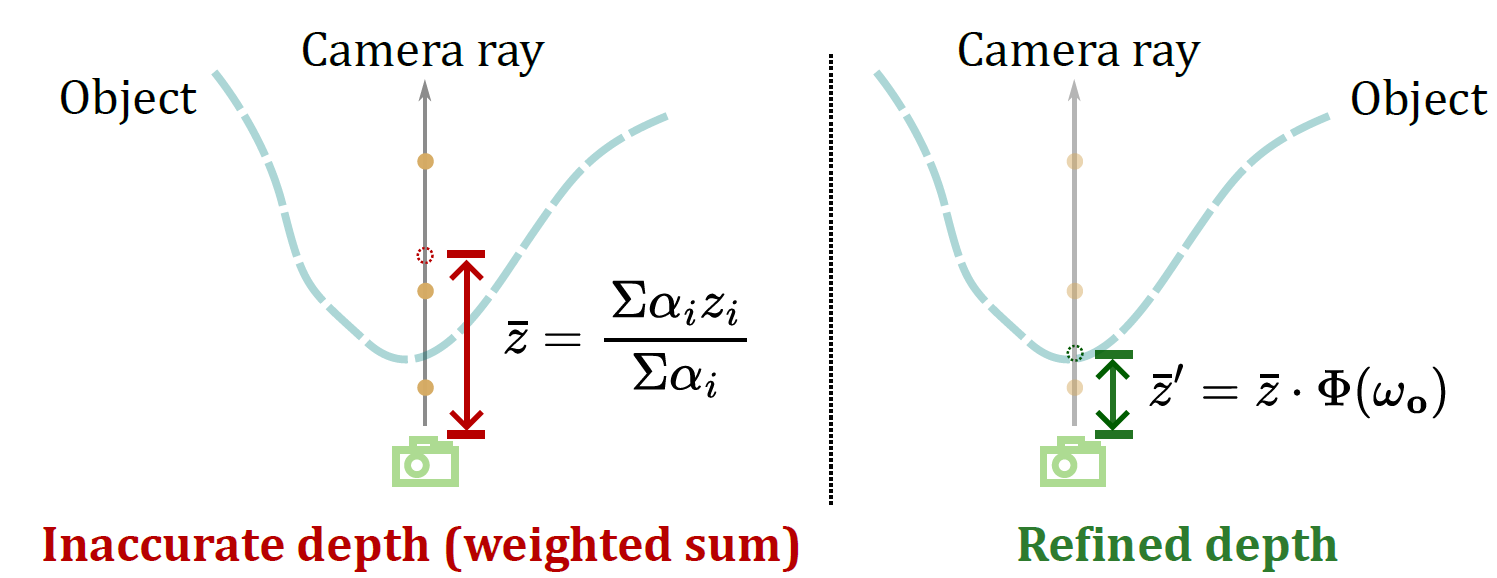

Depth Refinement

Effect of the depth refinement network. The weighted sum of Gaussian depths is inaccurate, which produces mismatched shadow cues. We therefore propose a depth refinement network that corrects the depth.
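A toy example shows why a weighted sum of Gaussian depths can be wrong. Suppose a pixel ray first passes through a semi-transparent Gaussian and then through a nearly opaque one. The blended depth then falls between the two surfaces, so the back-projected point lies on neither of them. The sketch assumes standard front-to-back alpha-compositing weights. It does not model the refinement network itself, whose architecture is not described here.

```python
import numpy as np


def composite_weights(alphas):
    # Front-to-back compositing: w_i = alpha_i * prod_{j<i} (1 - alpha_j)
    return alphas * np.cumprod(np.concatenate([[1.0], 1.0 - alphas[:-1]]))


def blended_depth(depths, alphas, normalize=False):
    """Weighted sum of per-Gaussian depths along one pixel ray (sorted front to back).

    With normalize=True the sum is divided by the accumulated weight
    (a common variant; which one a given renderer uses is an assumption here).
    """
    w = composite_weights(alphas)
    total = float(np.sum(w * depths))
    return total / float(np.sum(w)) if normalize else total


# Toy pixel: a semi-transparent surface at depth 1.0 in front of a nearly opaque one at 3.0.
depths = np.array([1.0, 3.0])
alphas = np.array([0.6, 0.9])
d_sum = blended_depth(depths, alphas)                  # 0.6*1.0 + 0.36*3.0 ≈ 1.68
d_avg = blended_depth(depths, alphas, normalize=True)  # 1.68 / 0.96 ≈ 1.75
print(d_sum, d_avg)
```

Neither value matches a real surface (1.0 or 3.0). A shading point placed at that depth would query the shadow map at the wrong location, which is the mismatch the refinement network is meant to correct.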

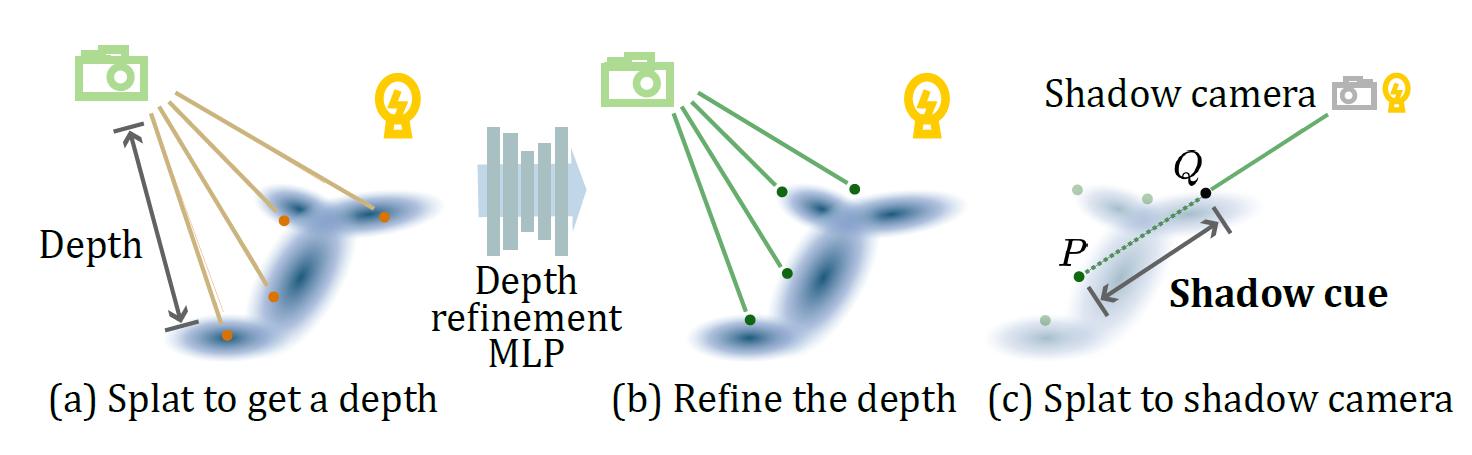

Shadow Mapping

Illustration of the shadow cue computation. First, we splat the Gaussians onto the camera to obtain depth values. Next, we run the depth refinement network to correct these depths and locate the shading points P. Finally, we splat the shading points onto the shadow camera to find the intersection points Q of the shadow rays, and store the distance |PQ| as the shadow cue.
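The steps above can be sketched with a classic shadow-map z-buffer. This is a simplified illustration under several assumptions, none of which come from the source. The light is directional, so the shadow camera is orthographic. Depth is measured along unit camera rays. Each shading point is rasterized as a single texel rather than splatted as a Gaussian. Q is taken to be the shading point nearest the light in P's texel. The function names `unproject` and `shadow_cues` are hypothetical.

```python
import numpy as np


def unproject(origin, ray_dirs, depths):
    """Shading points P = camera origin + depth * unit ray direction."""
    return origin + depths[:, None] * ray_dirs


def shadow_cues(points, light_dir, res=64, extent=2.0):
    """Distance |PQ| for each shading point P under a directional light.

    Q is the first point hit along P's shadow ray, i.e. the shading point
    closest to the light that falls in the same shadow-map texel as P.
    """
    d = light_dir / np.linalg.norm(light_dir)  # direction the light travels
    # Orthonormal basis (u, v) spanning the shadow camera's image plane.
    helper = np.array([0.0, 0.0, 1.0]) if abs(d[2]) < 0.9 else np.array([1.0, 0.0, 0.0])
    u = np.cross(d, helper)
    u /= np.linalg.norm(u)
    v = np.cross(d, u)
    # Shadow-camera coordinates: (x, y) on the image plane, z along the light direction.
    x, y, z = points @ u, points @ v, points @ d
    px = np.clip(((x + extent) / (2 * extent) * res).astype(int), 0, res - 1)
    py = np.clip(((y + extent) / (2 * extent) * res).astype(int), 0, res - 1)
    # Z-buffer: keep the index of the point nearest the light in each texel.
    zbuf = np.full((res, res), np.inf)
    nearest = np.full((res, res), -1)
    for i in range(len(points)):
        if z[i] < zbuf[py[i], px[i]]:
            zbuf[py[i], px[i]] = z[i]
            nearest[py[i], px[i]] = i
    q = points[nearest[py, px]]  # Q for every P (P itself if nothing occludes it)
    return np.linalg.norm(points - q, axis=1)


# Light shining straight down: the point at z=1 occludes the point at z=0.
pts = np.array([[0.0, 0.0, 1.0], [0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
print(shadow_cues(pts, np.array([0.0, 0.0, -1.0])))
```

In this sketch, a cue of 0 means P is the first surface the light reaches (lit). A positive cue is the distance to the occluder, which a decoder can use to distinguish nearby occluders from distant ones.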